[ad_1]

Within the present AI zeitgeist, sequence fashions have skyrocketed in reputation for his or her potential to investigate knowledge and predict what to do subsequent. As an illustration, you’ve doubtless used next-token prediction fashions like ChatGPT, which anticipate every phrase (token) in a sequence to type solutions to customers’ queries. There are additionally full-sequence diffusion fashions like Sora, which convert phrases into dazzling, reasonable visuals by successively “denoising” a complete video sequence.

Researchers from MIT’s Pc Science and Synthetic Intelligence Laboratory (CSAIL) have proposed a easy change to the diffusion coaching scheme that makes this sequence denoising significantly extra versatile.

When utilized to fields like laptop imaginative and prescient and robotics, the next-token and full-sequence diffusion fashions have functionality trade-offs. Subsequent-token fashions can spit out sequences that fluctuate in size. Nonetheless, they make these generations whereas being unaware of fascinating states within the far future — resembling steering its sequence technology towards a sure purpose 10 tokens away — and thus require further mechanisms for long-horizon (long-term) planning. Diffusion fashions can carry out such future-conditioned sampling, however lack the flexibility of next-token fashions to generate variable-length sequences.

Researchers from CSAIL wish to mix the strengths of each fashions, in order that they created a sequence mannequin coaching approach referred to as “Diffusion Forcing.” The identify comes from “Trainer Forcing,” the traditional coaching scheme that breaks down full sequence technology into the smaller, simpler steps of next-token technology (very like an excellent instructor simplifying a fancy idea).

Diffusion Forcing discovered widespread floor between diffusion fashions and instructor forcing: They each use coaching schemes that contain predicting masked (noisy) tokens from unmasked ones. Within the case of diffusion fashions, they regularly add noise to knowledge, which will be considered as fractional masking. The MIT researchers’ Diffusion Forcing technique trains neural networks to cleanse a set of tokens, eradicating totally different quantities of noise inside every one whereas concurrently predicting the following few tokens. The consequence: a versatile, dependable sequence mannequin that resulted in higher-quality synthetic movies and extra exact decision-making for robots and AI brokers.

By sorting by noisy knowledge and reliably predicting the following steps in a activity, Diffusion Forcing can support a robotic in ignoring visible distractions to finish manipulation duties. It might probably additionally generate secure and constant video sequences and even information an AI agent by digital mazes. This technique might probably allow family and manufacturing unit robots to generalize to new duties and enhance AI-generated leisure.

“Sequence fashions intention to situation on the recognized previous and predict the unknown future, a kind of binary masking. Nonetheless, masking doesn’t have to be binary,” says lead writer, MIT electrical engineering and laptop science (EECS) PhD pupil, and CSAIL member Boyuan Chen. “With Diffusion Forcing, we add totally different ranges of noise to every token, successfully serving as a kind of fractional masking. At check time, our system can “unmask” a set of tokens and diffuse a sequence within the close to future at a decrease noise degree. It is aware of what to belief inside its knowledge to beat out-of-distribution inputs.”

In a number of experiments, Diffusion Forcing thrived at ignoring deceptive knowledge to execute duties whereas anticipating future actions.

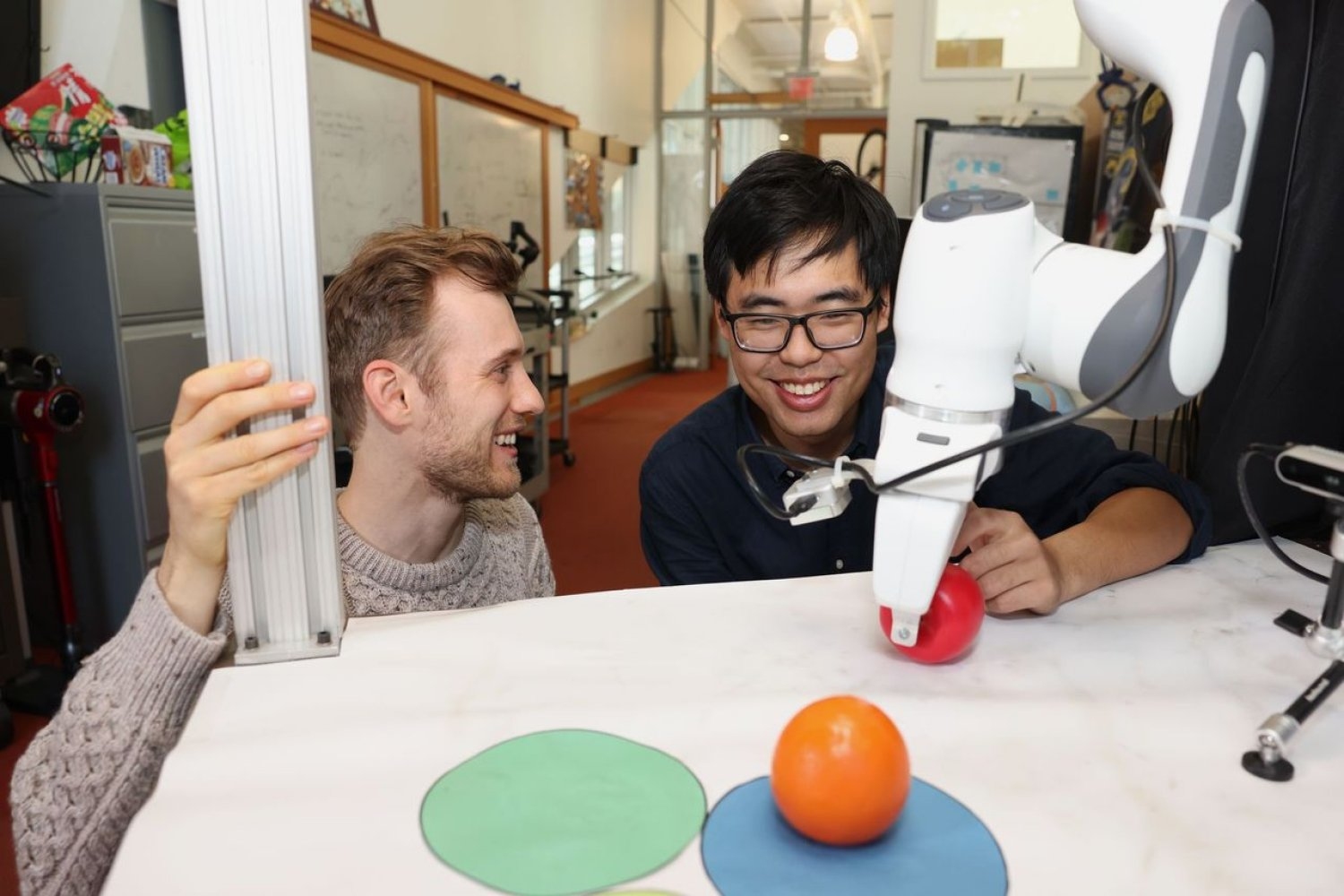

When carried out right into a robotic arm, for instance, it helped swap two toy fruits throughout three round mats, a minimal instance of a household of long-horizon duties that require recollections. The researchers educated the robotic by controlling it from a distance (or teleoperating it) in digital actuality. The robotic is educated to imitate the consumer’s actions from its digicam. Regardless of ranging from random positions and seeing distractions like a buying bag blocking the markers, it positioned the objects into its goal spots.

To generate movies, they educated Diffusion Forcing on “Minecraft” recreation play and colourful digital environments created inside Google’s DeepMind Lab Simulator. When given a single body of footage, the strategy produced extra secure, higher-resolution movies than comparable baselines like a Sora-like full-sequence diffusion mannequin and ChatGPT-like next-token fashions. These approaches created movies that appeared inconsistent, with the latter typically failing to generate working video previous simply 72 frames.

Diffusion Forcing not solely generates fancy movies, however also can function a movement planner that steers towards desired outcomes or rewards. Because of its flexibility, Diffusion Forcing can uniquely generate plans with various horizon, carry out tree search, and incorporate the instinct that the distant future is extra unsure than the close to future. Within the activity of fixing a 2D maze, Diffusion Forcing outperformed six baselines by producing sooner plans resulting in the purpose location, indicating that it may very well be an efficient planner for robots sooner or later.

Throughout every demo, Diffusion Forcing acted as a full sequence mannequin, a next-token prediction mannequin, or each. In accordance with Chen, this versatile strategy might probably function a strong spine for a “world mannequin,” an AI system that may simulate the dynamics of the world by coaching on billions of web movies. This might permit robots to carry out novel duties by imagining what they should do primarily based on their environment. For instance, in the event you requested a robotic to open a door with out being educated on how one can do it, the mannequin might produce a video that’ll present the machine how one can do it.

The group is presently seeking to scale up their technique to bigger datasets and the newest transformer fashions to enhance efficiency. They intend to broaden their work to construct a ChatGPT-like robotic mind that helps robots carry out duties in new environments with out human demonstration.

“With Diffusion Forcing, we’re taking a step to bringing video technology and robotics nearer collectively,” says senior writer Vincent Sitzmann, MIT assistant professor and member of CSAIL, the place he leads the Scene Illustration group. “In the long run, we hope that we will use all of the information saved in movies on the web to allow robots to assist in on a regular basis life. Many extra thrilling analysis challenges stay, like how robots can be taught to mimic people by watching them even when their very own our bodies are so totally different from our personal!”

Chen and Sitzmann wrote the paper alongside latest MIT visiting researcher Diego Martí Monsó, and CSAIL associates: Yilun Du, a EECS graduate pupil; Max Simchowitz, former postdoc and incoming Carnegie Mellon College assistant professor; and Russ Tedrake, the Toyota Professor of EECS, Aeronautics and Astronautics, and Mechanical Engineering at MIT, vice chairman of robotics analysis on the Toyota Analysis Institute, and CSAIL member. Their work was supported, partially, by the U.S. Nationwide Science Basis, the Singapore Defence Science and Know-how Company, Intelligence Superior Analysis Initiatives Exercise by way of the U.S. Division of the Inside, and the Amazon Science Hub. They are going to current their analysis at NeurIPS in December.

[ad_2]

Source link