
This code snippet demonstrates how to configure and use the jina-colbert-v1-en model to index a set of documents, taking advantage of its ability to handle long contexts efficiently.

Implementing Two-Stage Retrieval with Rerankers

Now that we understand the principles behind two-stage retrieval and rerankers, let’s explore how to implement them in a RAG system. We’ll use popular libraries and frameworks to show how these techniques fit together.
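Before bringing in real models, here is a minimal, library-free sketch of the two-stage pattern. The function `two_stage_retrieve` and the toy scorers `word_overlap` and `overlap_ratio` are hypothetical stand-ins for a bi-encoder retriever and a reranker. Only the structure matters here: every document is scored cheaply, and only the top k are rescored with the expensive model.

```python
def two_stage_retrieve(query, docs, cheap_score, expensive_score, k=10, n=3):
    """Return the top-n docs: cheap_score filters all docs down to k candidates,
    then expensive_score reorders only those candidates."""
    # Stage 1 (recall): score every document cheaply and keep the k best
    candidates = sorted(docs, key=lambda d: cheap_score(query, d), reverse=True)[:k]
    # Stage 2 (precision): rescore only the k candidates with the costly model
    reranked = sorted(candidates, key=lambda d: expensive_score(query, d), reverse=True)
    return reranked[:n]


def word_overlap(query, doc):
    # Toy stand-in for a bi-encoder: number of shared lowercase words
    return len(set(query.lower().split()) & set(doc.lower().split()))


def overlap_ratio(query, doc):
    # Toy stand-in for a reranker: shared words divided by document length
    return word_overlap(query, doc) / len(doc.split())


docs = [
    "in two stage retrieval rerankers reorder the candidates returned by a fast first stage",
    "rerankers reorder candidates",
    "vector search returns many loosely related candidates quickly from a huge index of documents",
    "cooking pasta at home",
]
print(two_stage_retrieve("rerankers reorder candidates", docs, word_overlap, overlap_ratio, k=3, n=2))
```

In this toy run, the second stage lifts the short, focused document above the longer one that tied with it in the first stage. The expensive scorer also runs on only 3 of the 4 documents. In a real system the gap is much larger: the first stage covers the whole collection, while the second sees only a few dozen candidates.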

Setting Up the Environment

Before we dive into the code, let’s set up our development environment. We’ll use Python and several popular NLP libraries, including Hugging Face Transformers, Sentence Transformers, and LanceDB.

```python
# Install required libraries (notebook syntax; drop the "!" in a terminal)
!pip install datasets huggingface_hub sentence_transformers lancedb
```

Data Preparation

For demonstration purposes, we’ll use the “ai-arxiv-chunked” dataset from Hugging Face Datasets. It contains over 400 ArXiv papers on machine learning, natural language processing, and large language models.

```python
from datasets import load_dataset

dataset = load_dataset("jamescalam/ai-arxiv-chunked", split="train")
```


Next, we’ll preprocess the data and split it into smaller, overlapping chunks to make retrieval and processing more efficient.

```python
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

def chunk_text(text, chunk_size=512, overlap=64):
    # Encode without truncation so long texts are not cut off at 512 tokens
    tokens = tokenizer.encode(text, add_special_tokens=False)
    # Advance by chunk_size - overlap so consecutive chunks share `overlap` tokens
    step = chunk_size - overlap
    chunks = [tokens[i:i + chunk_size] for i in range(0, len(tokens), step)]
    # Note: bert-base-uncased lowercases text, so decoded chunks are lowercase
    return [tokenizer.decode(chunk) for chunk in chunks]

chunked_data = []
for doc in dataset:
    chunked_data.extend(chunk_text(doc["chunk"]))
```
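The overlap arithmetic is easy to get wrong. The sketch below applies the same kind of windowing to a plain list of integers, with no tokenizer involved. `sliding_windows` is a hypothetical helper, not part of Transformers. It stops as soon as a window reaches the end of the sequence, so it never emits a trailing window that lies entirely inside the previous one.

```python
def sliding_windows(token_ids, chunk_size=512, overlap=64):
    """Split a token-ID list into windows of at most chunk_size tokens,
    where consecutive windows share `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    windows = []
    for start in range(0, len(token_ids), step):
        windows.append(token_ids[start:start + chunk_size])
        # Stop once this window reaches the end of the sequence
        if start + chunk_size >= len(token_ids):
            break
    return windows


print(sliding_windows(list(range(10)), chunk_size=4, overlap=1))
# → [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
```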

For the initial retrieval stage, we’ll use a Sentence Transformer model to encode documents and queries into dense vectors. We’ll then run an approximate nearest neighbor search over those vectors with a vector database such as LanceDB.

```python
import lancedb
from sentence_transformers import SentenceTransformer

# Load the Sentence Transformer model
# (all-MiniLM-L6-v2 truncates inputs longer than model.max_seq_length word pieces)
model = SentenceTransformer("all-MiniLM-L6-v2")

# Connect to (or create) a local LanceDB database
db = lancedb.connect("/path/to/store")

# Encode all chunks in batches, then index each vector together with its text
vectors = model.encode(chunked_data, batch_size=64, show_progress_bar=True)
table = db.create_table(
    "docs",
    data=[{"vector": v.tolist(), "text": t} for v, t in zip(vectors, chunked_data)],
    mode="overwrite",
)
```
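Conceptually, the first stage ranks every chunk by its vector similarity to the query. The sketch below shows the exact, brute-force version of that computation with NumPy. An approximate nearest neighbor index gives up a little of this exactness in exchange for speed on large collections. The helper `exact_top_k` and the 2-dimensional vectors are illustrative assumptions, not LanceDB internals. For unit-length vectors, ranking by cosine similarity and ranking by L2 distance (LanceDB’s default metric) produce the same order.

```python
import numpy as np

def exact_top_k(query_vec, doc_vecs, k=3):
    """Brute-force cosine-similarity search: the result an ANN index approximates."""
    q = query_vec / np.linalg.norm(query_vec)
    d = doc_vecs / np.linalg.norm(doc_vecs, axis=1, keepdims=True)
    sims = d @ q                   # cosine similarity of every document to the query
    top = np.argsort(-sims)[:k]    # indices of the k most similar documents
    return top.tolist(), sims[top].tolist()


doc_vecs = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
ids, scores = exact_top_k(np.array([1.0, 0.1]), doc_vecs, k=2)
print(ids)  # → [0, 2]
```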


With our documents indexed, we can perform the initial retrieval by finding the nearest neighbors of a given query vector.


Reranking

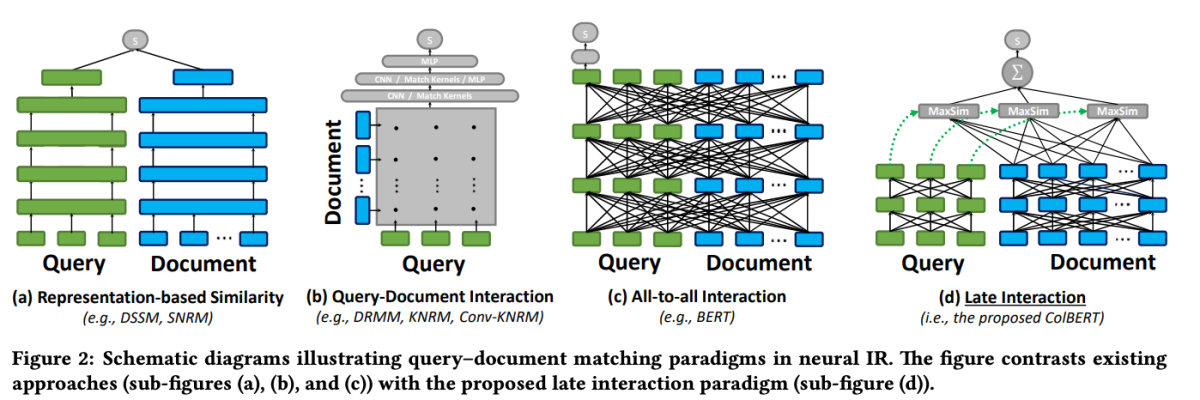

After the initial retrieval, we’ll use a reranking model to reorder the retrieved documents by their relevance to the query. In this example, we’ll use the ColBERT reranker, which is based on a fast, accurate transformer model designed specifically for document ranking.

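```python
from lancedb.rerankers import ColbertReranker

reranker = ColbertReranker()
query = "How do rerankers improve retrieval quality?"  # example query (hypothetical)

# Retrieve 25 candidates by vector search, then rerank them with ColBERT
table = db.open_table("docs")
results = (
    table.search(model.encode(query))
    .limit(25)
    .rerank(reranker=reranker, query_string=query)
    .to_list()
)
reranked_docs = [row["text"] for row in results]
```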

The reranked_docs list now contains the document texts reordered by their relevance to the query, as determined by the ColBERT reranker.
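ColBERT scores a query-document pair by late interaction. The query and the document are encoded separately into one embedding per token. The score is then the sum, over query tokens, of each token’s maximum similarity to any document token (MaxSim). The NumPy sketch below implements that scoring rule on toy 2-dimensional token embeddings. In `ColbertReranker` the embeddings come from a trained ColBERT model, and `maxsim_score` is a hypothetical helper, not the library’s code.

```python
import numpy as np

def maxsim_score(query_embs, doc_embs):
    """ColBERT-style late interaction: for each query token embedding, take its
    maximum cosine similarity over all document token embeddings, then sum."""
    q = query_embs / np.linalg.norm(query_embs, axis=1, keepdims=True)
    d = doc_embs / np.linalg.norm(doc_embs, axis=1, keepdims=True)
    sim = q @ d.T  # (num_query_tokens, num_doc_tokens) similarity matrix
    return float(sim.max(axis=1).sum())


q_tokens = np.array([[1.0, 0.0], [0.0, 1.0]])
doc_a = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # matches both query tokens
doc_b = np.array([[1.0, 0.0], [1.0, 0.1]])              # matches mainly the first
print(maxsim_score(q_tokens, doc_a), maxsim_score(q_tokens, doc_b))
```

Document token embeddings do not depend on the query, so they can be precomputed. This is what makes ColBERT cheaper than a cross-encoder, which must re-encode every query-document pair together.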

Augmentation and Generation

With the reranked, relevant documents in hand, we can move on to the augmentation and generation stages of the RAG pipeline. We’ll use a language model from the Hugging Face Transformers library to generate the final response.

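```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

# Separate names keep the retrieval-stage `tokenizer` and `model` intact
gen_tokenizer = AutoTokenizer.from_pretrained("t5-base")
gen_model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

# Augment the query with the top three reranked documents
# (SQuAD-style "question: ... context: ..." layout, as used in T5's training mixture)
augmented_query = f"question: {query} context: {' '.join(reranked_docs[:3])}"

# Generate a response; truncate the input to the 512 tokens t5-base was trained on
input_ids = gen_tokenizer.encode(augmented_query, return_tensors="pt", truncation=True, max_length=512)
output_ids = gen_model.generate(input_ids, max_length=500)
response = gen_tokenizer.decode(output_ids[0], skip_special_tokens=True)
print(response)
```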
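When several passages go into one model input, it helps to mark their rank order and bound their total length. The sketch below numbers the passages and caps the context with a character budget. It uses the SQuAD-style `question: ... context: ...` layout from T5’s multitask training. The function `build_prompt`, its parameters, and the character budget are assumptions for illustration; the budget is only a crude stand-in for the model’s token limit.

```python
def build_prompt(question, passages, max_passages=3, max_chars=2000):
    """Build a T5-style 'question: ... context: ...' input from reranked passages,
    keeping the highest-ranked passages first and respecting a character budget."""
    context_parts = []
    used = 0
    for rank, passage in enumerate(passages[:max_passages], start=1):
        part = f"[{rank}] {passage.strip()}"
        if used + len(part) > max_chars:
            break  # stop before the context exceeds the budget
        context_parts.append(part)
        used += len(part) + 1  # +1 for the joining space
    return f"question: {question} context: {' '.join(context_parts)}"


print(build_prompt("What does a reranker do?",
                   ["It reorders candidates.", "It keeps the best.", "Extra.", "Dropped."]))
# → question: What does a reranker do? context: [1] It reorders candidates. [2] It keeps the best. [3] Extra.
```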
